This post is co-written with Matt Marzillo from Snowflake.

Today, we are excited to announce that the Snowflake Arctic Instruct model is available through Amazon SageMaker JumpStart to deploy and run inference. Snowflake Arctic is a family of enterprise-grade large language models (LLMs) built by Snowflake to cater to the needs of enterprise users, exhibiting exceptional capabilities (as shown in the following benchmarks) in SQL querying, coding, and accurately following instructions. SageMaker JumpStart is a machine learning (ML) hub that provides access to algorithms, models, and ML solutions so you can quickly get started with ML.

In this post, we walk through how to discover and deploy the Snowflake Arctic Instruct model using SageMaker JumpStart, and provide example use cases with specific prompts.

What is Snowflake Arctic

Snowflake Arctic is an enterprise-focused LLM that delivers top-tier enterprise intelligence among open LLMs with highly competitive cost-efficiency. Snowflake achieves this through a Dense Mixture of Experts (MoE) hybrid transformer architecture and efficient training techniques. In this hybrid architecture, Arctic combines a 10-billion-parameter dense transformer model with a residual 128×3.66B MoE MLP, resulting in a total of 480 billion parameters spread across 128 fine-grained experts, and uses top-2 gating to select 17 billion active parameters. The large number of total parameters gives Snowflake Arctic more capacity for enterprise intelligence, while the moderate number of active parameters keeps training and inference resource-efficient.
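The parameter counts above can be checked with a few lines of arithmetic. This is a rough sketch that assumes the 10B dense part is always active and that each of the two experts chosen by top-2 gating contributes its full 3.66B MLP parameters; the variable names are illustrative, and the rounded figures (480B total, 17B active) are the ones quoted in the text.

```python
# Back-of-the-envelope check of the Arctic parameter counts (in billions).
# Assumption: active parameters = dense part + the top-2 selected experts.
DENSE_B = 10.0      # dense transformer parameters
NUM_EXPERTS = 128   # fine-grained experts in the residual MoE MLP
EXPERT_B = 3.66     # parameters per expert
TOP_K = 2           # experts selected per token by top-2 gating

total_b = DENSE_B + NUM_EXPERTS * EXPERT_B   # all experts counted
active_b = DENSE_B + TOP_K * EXPERT_B        # only the selected experts counted

print(f"total:  {total_b:.2f}B (quoted as 480B)")
print(f"active: {active_b:.2f}B (quoted as 17B)")
print(f"active share of total: {active_b / total_b:.1%}")
```

Under these assumptions, only a few percent of the total parameters are engaged for any single token, which is the source of the efficiency claim.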

Snowflake Arctic is trained with a three-stage data curriculum with different data composition focusing on generic skills in the first phase (1 trillion tokens, the majority from web data), and enterprise-focused skills in the next two phases (1.5 trillion and 1 trillion tokens, respectively, with more code, SQL, and STEM data). This helps the Snowflake Arctic model set a new baseline of enterprise intelligence while being cost-effective.

In addition to the cost-effective training, Snowflake Arctic also comes with a number of innovations and optimizations to run inference efficiently. At small batch sizes, inference is memory bandwidth bound, and Snowflake Arctic can have up to four times fewer memory reads compared to other openly available models, leading to faster inference performance. At very large batch sizes, inference becomes compute bound, and Snowflake Arctic requires up to four times less compute than other openly available models. Snowflake Arctic models are available under an Apache 2.0 license, which provides ungated access to weights and code. All the data recipes and research insights will also be made available for customers.

What is SageMaker JumpStart

With SageMaker JumpStart, you can choose from a broad selection of publicly available foundation models (FMs). ML practitioners can deploy FMs to dedicated Amazon SageMaker instances from a network-isolated environment and customize models using SageMaker for model training and deployment. You can now discover and deploy the Arctic Instruct model with a few clicks in Amazon SageMaker Studio or programmatically through the SageMaker Python SDK, enabling you to derive model performance and machine learning operations (MLOps) controls with SageMaker features such as Amazon SageMaker Pipelines, Amazon SageMaker Debugger, or container logs. The model is deployed in an AWS secure environment and under your virtual private cloud (VPC) controls, helping provide data security. The Snowflake Arctic Instruct model is available today for deployment and inference in SageMaker Studio in the us-east-2 AWS Region, with planned future availability in additional Regions.

Discover models

You can access the FMs through SageMaker JumpStart in the SageMaker Studio UI and the SageMaker Python SDK. In this section, we go over how to discover the models in SageMaker Studio.

SageMaker Studio is an integrated development environment (IDE) that provides a single web-based visual interface where you can access purpose-built tools to perform all ML development steps, from preparing data to building, training, and deploying your ML models. For more details on how to get started and set up SageMaker Studio, refer to Amazon SageMaker Studio.

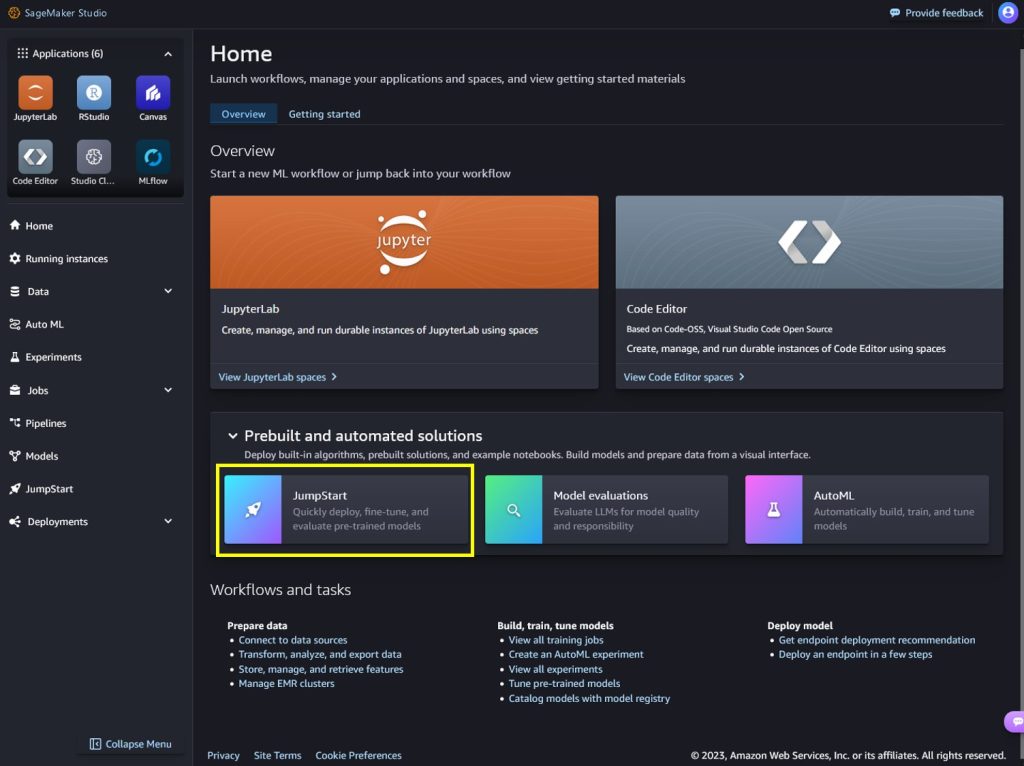

In SageMaker Studio, you can access SageMaker JumpStart, which contains pre-trained models, notebooks, and prebuilt solutions, under Prebuilt and automated solutions.

From the SageMaker JumpStart landing page, you can discover various models by browsing through different hubs, which are named after model providers. You can find the Snowflake Arctic Instruct model in the Hugging Face hub. If you don’t see the Arctic Instruct model, update your SageMaker Studio version by shutting down and restarting. For more information, refer to Shut down and Update Studio Classic Apps.

You can also find the Snowflake Arctic Instruct model by searching for “Snowflake” in the search field.

You can choose the model card to view details about the model such as license, data used to train, and how to use the model. You will also find two options to deploy the model, Deploy and Preview notebooks, which will deploy the model and create an endpoint.

Deploy the model in SageMaker Studio

When you choose Deploy in SageMaker Studio, deployment will start.

You can monitor the progress of the deployment on the endpoint details page that you’re redirected to.

Deploy the model through a notebook

Alternatively, you can choose Open notebook to deploy the model through the example notebook. The example notebook provides end-to-end guidance on how to deploy the model for inference and clean up resources.

To deploy using the notebook, you start by selecting an appropriate model, specified by the model_id. You can deploy any of the selected models on SageMaker with the following code:
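A minimal sketch of that deployment step, assuming the SageMaker Python SDK (`sagemaker`) is installed and that AWS credentials and a SageMaker execution role are already configured. The helper name `deploy_jumpstart_model` is hypothetical, and the `model_id` is left as an argument: copy the exact value from the model card or the example notebook.

```python
def deploy_jumpstart_model(model_id, instance_type=None):
    """Deploy a JumpStart model and return the predictor for its endpoint.

    model_id: the JumpStart model ID shown on the model card (not filled in here).
    instance_type: optional override; when omitted, the JumpStart default is used.
    """
    # Imported inside the function so the module loads even without the SDK.
    from sagemaker.jumpstart.model import JumpStartModel

    kwargs = {"model_id": model_id}
    if instance_type is not None:
        kwargs["instance_type"] = instance_type

    model = JumpStartModel(**kwargs)
    # deploy() creates the endpoint and returns a predictor bound to it.
    return model.deploy()
```

Calling `deploy_jumpstart_model(...)` creates a billable endpoint, so pair it with the clean-up step at the end of this post.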

This deploys the model on SageMaker with default configurations, including the default instance type and default VPC configurations. You can change these configurations by specifying non-default values in JumpStartModel. To learn more, refer to the API documentation.

Run inference

After you deploy the model, you can run inference against the deployed endpoint through the SageMaker predictor API. Snowflake Arctic Instruct accepts the chat history between the user and the assistant and generates the next response.

predictor.predict(payload)

Inference parameters control the text generation process at the endpoint. The max_new_tokens parameter limits the length of the output generated by the model. The limit is counted in tokens, which may not match the number of words, because the model’s vocabulary is not the same as the English language vocabulary. The temperature parameter controls the randomness of the output: a higher temperature produces more creative outputs that are also more prone to hallucination. All the inference parameters are optional.
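As an illustration of how the optional parameters fit into a request, the following sketch builds a payload dictionary. The parameter names and values are the ones used for the examples in this post; the top-level `inputs` and `parameters` keys and the helper name `build_payload` are assumptions, so check the example notebook for the exact request schema.

```python
def build_payload(prompt, **parameters):
    """Build a request payload, leaving out any parameter that is not set.

    Assumption: the endpoint expects {"inputs": ..., "parameters": {...}}.
    """
    # Drop parameters passed as None so the endpoint defaults apply.
    params = {name: value for name, value in parameters.items() if value is not None}
    payload = {"inputs": prompt}
    if params:
        payload["parameters"] = params
    return payload


# Payload with the inference parameters quoted for the examples in this post.
payload = build_payload(
    "Write a function in Python to write a json file:",
    max_new_tokens=512,
    top_p=0.95,
    temperature=0.7,
    top_k=50,
)
```

Because every parameter is optional, `build_payload(prompt)` with no keyword arguments produces a payload containing only the prompt.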

The model accepts formatted instructions in which, after an optional system message, the conversation must start with a prompt from the user and alternate between user instructions and assistant responses. The instruction format must be strictly respected; otherwise, the model will generate suboptimal outputs. The template to build a prompt for the model is defined as follows:

<|im_start|>system

{system_message} <|im_end|>

<|im_start|>user

{human_message} <|im_end|>

<|im_start|>assistant\n

<|im_start|> and <|im_end|> are special tokens for beginning of string (BOS) and end of string (EOS). A prompt can contain multiple conversation turns between system, user, and assistant, allowing for the incorporation of few-shot examples to enhance the model’s responses.

The following code shows how you can format the prompt in instruction format:

<|im_start|>user\n5x + 35 = 7x -60 + 10. Solve for x<|im_end|>\n<|im_start|>assistant\n
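The same format can be produced programmatically. The following is a minimal sketch, not an official helper: `format_prompt` and the message dictionaries with `role` and `content` keys are hypothetical names chosen for illustration. It follows the single-turn example above (no space before `<|im_end|>`) and rejects conversations that do not alternate between user and assistant.

```python
IM_START = "<|im_start|>"
IM_END = "<|im_end|>"


def format_prompt(messages):
    """Render a list of {"role", "content"} messages in the instruction format.

    System messages are passed through; the remaining turns must start with
    "user" and alternate with "assistant", ending on a user turn.
    """
    expected_role = "user"
    parts = []
    for message in messages:
        role, content = message["role"], message["content"]
        if role != "system":
            if role != expected_role:
                raise ValueError(f"expected a {expected_role!r} turn, got {role!r}")
            expected_role = "assistant" if role == "user" else "user"
        parts.append(f"{IM_START}{role}\n{content}{IM_END}\n")
    if expected_role != "assistant":
        raise ValueError("the conversation must end with a user turn")
    # Leave the assistant turn open so the model generates the reply.
    parts.append(f"{IM_START}assistant\n")
    return "".join(parts)


prompt = format_prompt(
    [{"role": "user", "content": "5x + 35 = 7x -60 + 10. Solve for x"}]
)
```

Few-shot examples can be supplied by adding earlier user and assistant message pairs to the list before the final user message.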

In the following sections, we provide example prompts for different enterprise-focused use cases.

Long text summarization

You can use Snowflake Arctic Instruct for custom tasks like summarizing long-form text into JSON-formatted output. Through text generation, you can perform a variety of tasks, such as text summarization, language translation, code generation, sentiment analysis, and more. The input payload to the endpoint looks like the following code:

The following is an example of a prompt and the text generated by the model. All outputs are generated with inference parameters {“max_new_tokens”:512, “top_p”:0.95, “temperature”:0.7, “top_k”:50}.

The input is as follows:

We get the following output:

Code generation

Using the preceding example, we can use code generation prompts as follows:

The preceding code uses Snowflake Arctic Instruct to generate a Python function that writes a JSON file. It defines a payload dictionary with the input prompt “Write a function in Python to write a json file:” and parameters that control the generation process, such as the maximum number of tokens to generate and whether to enable sampling. It sends this payload to the predictor for the deployed endpoint, receives the generated text, and prints it to the console. The printed output should be the requested Python function for writing a JSON file.

The following is the output:

This will create a file named `output.json` in the same directory as your Python script, and write the `data` dictionary to that file in JSON format.

The output from the code generation defines a write_json function that takes a file name and a Python object and writes the object as JSON data. The output also shows the expected JSON file content, illustrating the model’s natural language processing and code generation capabilities.
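For reference, a function matching that description could look like the following. This is a hand-written illustration of the described behavior, not the model's actual output, and the sample `data` dictionary is made up for the example.

```python
import json


def write_json(file_name, data):
    """Write a Python object to file_name as JSON."""
    with open(file_name, "w", encoding="utf-8") as f:
        json.dump(data, f, indent=4)


if __name__ == "__main__":
    # Hypothetical sample data; writes output.json to the working directory.
    data = {"name": "Snowflake Arctic", "experts": 128}
    write_json("output.json", data)
```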

Mathematics and reasoning

Snowflake Arctic Instruct also shows strength in mathematical reasoning. Let’s use the following prompt to test it:

The following is the output:

The preceding output shows Snowflake Arctic’s capability to comprehend natural language prompts involving mathematical reasoning, break them down into logical steps, and generate human-like explanations and solutions.

SQL generation

The Snowflake Arctic Instruct model is also adept at generating SQL queries from natural language prompts, thanks to its enterprise-focused training. We test that capability with the following prompt:

The following is the output:

The output shows that Snowflake Arctic Instruct inferred the specific fields of interest in the tables and provided a slightly more complex query that involves joining two tables to get the desired result.

Clean up

After you’re done running the notebook, delete all resources that you created in the process to stop incurring charges. Use the following code:

If you deployed the endpoint from the SageMaker Studio console, you can delete it by choosing Delete on the endpoint details page.
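A minimal clean-up sketch, assuming `predictor` is the object returned when the model was deployed with the SageMaker Python SDK. `delete_model` and `delete_endpoint` are methods of the SDK's predictor class; the wrapper name `cleanup` is hypothetical.

```python
def cleanup(predictor):
    """Delete the model and the endpoint behind a SageMaker predictor."""
    # Remove the SageMaker model resource first, then the endpoint itself.
    predictor.delete_model()
    predictor.delete_endpoint()
```

After calling `cleanup(predictor)`, confirm in the SageMaker console that the endpoint no longer appears.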

Conclusion

In this post, we showed you how to get started with the Snowflake Arctic Instruct model in SageMaker Studio, and provided example prompts for multiple enterprise use cases. Because FMs are pre-trained, they can also help lower training and infrastructure costs and enable customization for your use case. Check out SageMaker JumpStart in SageMaker Studio now to get started. To learn more, refer to the following resources:

Getting started with Amazon SageMaker JumpStart

Train, deploy, and evaluate pretrained models with SageMaker JumpStart

JumpStart Foundation Models

About the Authors

Natarajan Chennimalai Kumar – Principal Solutions Architect, 3P Model Providers, AWS

Pavan Kumar Rao Navule – Solutions Architect, AWS

Nidhi Gupta – Sr Partner Solutions Architect, AWS

Bosco Albuquerque – Sr Partner Solutions Architect, AWS

Matt Marzillo – Sr Partner Engineer, Snowflake

Nithin Vijeaswaran – Solutions Architect, AWS

Armando Diaz – Solutions Architect, AWS

Supriya Puragundla – Sr Solutions Architect, AWS

Jin Tan Ruan – Prototyping Developer, AWS